AI Innovations Transforming Infrastructure Management Practices

Introduction and Outline: Why Infrastructure Needs AI Now

Infrastructure is under pressure from aging assets, climate volatility, rising demand, and constrained budgets. The traditional model—scheduled inspections, reactive repairs, and siloed control rooms—struggles to keep pace. Enter a trio of AI-aligned capabilities: automation that executes routine actions with reliable consistency, predictive analytics that turns data into foresight, and smart infrastructure that senses, connects, and adapts in near real time. Together, they form a practical stack that shortens response times, reduces waste, and extends asset life—without requiring wholesale reinvention on day one.

Think of this shift as moving from a detective story told in hindsight to a live broadcast with early warnings and automatic, safe adjustments. Pumps tune themselves before energy spikes, structures report unusual vibrations after a storm, and work orders flow to the right crews with verified parts availability. Evidence from sector-wide studies suggests that organizations adopting these approaches can cut unplanned downtime by double-digit percentages while shifting maintenance budgets toward planned activities. The aim is not to replace experienced operators; it is to amplify their judgment with timely signals and repeatable, transparent workflows.

This article follows a clear roadmap so you can match concepts to your current maturity and constraints:

– Section 1: Context and outline, clarifying why adoption is urgent and feasible now

– Section 2: Automation fundamentals, use cases, and measurable impacts on safety and cost

– Section 3: Predictive analytics methods, data requirements, and ROI guardrails

– Section 4: Smart infrastructure, from sensing to digital replicas, and how to integrate old and new

– Section 5: A practical implementation plan and a closing message for decision-makers

By the end, you will have a structured way to evaluate where to start, how to scale responsibly, and which indicators matter most. Keep an eye out for comparative notes—manual versus automated response, preventive versus predictive maintenance, and greenfield versus retrofit build-outs—because context determines the right tool for each job. Let’s turn abstractions into operational steps.

Automation: From Reactive Tasks to Reliable, Safe Execution

Automation in infrastructure blends control logic, workflow orchestration, and physical actuation to remove lag between detection and response. In a water network, for example, rule-based controls can stabilize pressure zones while advanced controllers trim energy use during peak tariffs. In transit depots, scheduling bots allocate vehicles and maintenance bays with minimal clashes, aligning staffing and spare parts with demand. Across utilities, evidence from independent benchmarking shows automated fault isolation and service restoration can shorten outage durations by 20 to 30 percent, especially when combined with well-tested switching sequences and safety interlocks.
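To make automated fault isolation and service restoration more concrete, here is a minimal Python sketch for a hypothetical radial feeder. The segment and switch numbering, the single normally open tie switch, and the function name `isolate_and_restore` are illustrative assumptions. A production scheme would also verify backup-feeder capacity, protection coordination, and the safety interlocks mentioned above.

```python
def isolate_and_restore(n_segments: int, faulted: int, tie_available: bool = True):
    """Return (switching steps, restored segments) for a hypothetical radial feeder.

    Segments 0..n-1 run outward from the substation breaker. Sectionalizing
    switch i sits between segment i and segment i + 1, and a normally open
    tie switch at the far end connects to a neighboring feeder.
    """
    if not 0 <= faulted < n_segments:
        raise ValueError("faulted segment out of range")
    steps: list[str] = []
    restored: list[int] = []
    # Isolate: open the switches on both sides of the faulted segment.
    if faulted > 0:
        steps.append(f"open switch {faulted - 1}")
    if faulted < n_segments - 1:
        steps.append(f"open switch {faulted}")
    # Restore upstream: reclose the tripped breaker if healthy segments precede the fault.
    if faulted > 0:
        steps.append("reclose breaker")
        restored += range(faulted)
    # Restore downstream via the tie. Assumes the neighboring feeder has spare
    # capacity; a real scheme would check loading and protection first.
    if faulted < n_segments - 1 and tie_available:
        steps.append("close tie switch")
        restored += range(faulted + 1, n_segments)
    return steps, restored


steps, restored = isolate_and_restore(5, faulted=2)
print(steps)     # ['open switch 1', 'open switch 2', 'reclose breaker', 'close tie switch']
print(restored)  # [0, 1, 3, 4]
```

In this sketch, a fault in the first segment leaves the breaker open and relies only on the tie, while a fault in the last segment needs no tie at all.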

To make sense of the landscape, consider four layers that often work together:

– Process automation: consistent execution of operating procedures, including start-up/shutdown sequences and alarm handling

– Workflow automation: routing of tasks, approvals, and documentation across operations, maintenance, and procurement

– Physical automation: drones, rovers, and stationary robots for inspection, cleaning, and repetitive field chores

– Decision automation: policies that trigger actions under known conditions, escalated to humans when uncertainty is high
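Decision automation can be as simple as a policy function. The Python sketch below assumes a hypothetical pressure-zone controller with a target band of 2 to 6 bar and a sensor-confidence score between 0 and 1. The thresholds, action names, and function name `decide` are illustrative. The key pattern is the early exit: when the input is not trusted, the policy escalates to a human instead of acting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    action: str
    escalate: bool  # True means a human must review before anything happens
    reason: str


def decide(pressure_bar: float, confidence: float,
           low: float = 2.0, high: float = 6.0,
           min_confidence: float = 0.8) -> Decision:
    """Hypothetical pressure-zone policy: act on known conditions, escalate otherwise."""
    # Uncertain input: do not act automatically, hand off to an operator.
    if confidence < min_confidence:
        return Decision("hold", True, "sensor confidence below limit")
    # Known conditions with a pre-approved response.
    if pressure_bar > high:
        return Decision("reduce_pump_speed", False, "pressure above band")
    if pressure_bar < low:
        return Decision("increase_pump_speed", False, "pressure below band")
    return Decision("no_action", False, "pressure within band")


print(decide(6.5, confidence=0.95).action)    # reduce_pump_speed
print(decide(6.5, confidence=0.50).escalate)  # True
```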

Compared with manual routines, automation offers predictability. It reduces human exposure to hazardous sites, standardizes quality, and makes audit trails far easier to keep. Yet it is not a magic switch. Risks include over-automation that hides failure modes, poorly tuned thresholds that cause alarm fatigue, and brittle integrations that break under edge cases. A pragmatic approach begins with well-scoped loops, such as pump optimization, HVAC scheduling, or feeder switching, where guardrails are strong and impact is visible. Many operators report quick wins: single-digit percentage reductions in energy use that add up over thousands of operating hours, fewer truck rolls because remote resets handle common faults, and shorter mean time to respond when workflows auto-generate and prioritize tickets.
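Poorly tuned thresholds are a common source of alarm fatigue: a reading that hovers near a single limit raises and clears the alarm over and over. One widely used mitigation combines a hysteresis band (separate raise and clear levels) with an on-delay. The Python sketch below illustrates that pattern; the function name `alarm_states` and the example limits are hypothetical.

```python
def alarm_states(readings, set_point, clear_point, on_delay=1):
    """Return the alarm state (True = active) after each reading.

    The alarm raises only after `on_delay` consecutive readings at or above
    `set_point`, and clears only when a reading drops below `clear_point`.
    """
    if clear_point >= set_point:
        raise ValueError("clear_point must be below set_point")
    active = False
    run = 0  # consecutive readings at or above set_point while inactive
    states = []
    for value in readings:
        if not active:
            run = run + 1 if value >= set_point else 0
            if run >= on_delay:
                active = True
                run = 0
        elif value < clear_point:
            active = False
        states.append(active)
    return states


print(alarm_states([79, 81, 79, 81, 82, 76, 74], set_point=80, clear_point=75, on_delay=2))
# [False, False, False, False, True, True, False]
```

For that sequence, the sketch produces one alarm episode, whereas a bare threshold at 80 would raise twice.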

Automation also opens room for creativity. When nightly batch jobs prepare tomorrow’s schedules and materials lists, supervisors can focus on constraints that algorithms cannot see, such as unexpected community events, weather anomalies, or supplier delays. The most reliable programs are transparent: clear logic, version control, simulations before deployment, and periodic reviews with operators who can challenge assumptions. Seen this way, automation is a sturdier floor for operations rather than a ceiling on human expertise.

Predictive Analytics: Seeing Failures Before They Happen

Predictive analytics converts time-stamped data into early warnings and quantified risk, enabling teams to intervene at the right moment. It thrives on sensor streams, maintenance histories, environmental records, and operational logs. Techniques range from simple thresholds and moving averages to survival analysis, multivariate anomaly detection, and sequence models for complex equipment. The goal is not to forecast with absolute certainty but to rank attention intelligently, so scarce resources address the highest-value risks first.
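At the simple end of that range, a moving average and standard deviation already yield a usable anomaly score. This Python sketch flags a reading whose rolling z-score, computed against the previous `window` readings, exceeds a limit. The function name, the window of 5, and the limit of 3.0 are illustrative defaults, not tuned values.

```python
from statistics import mean, stdev


def rolling_zscore_flags(values: list[float], window: int = 5, z_limit: float = 3.0) -> list[bool]:
    """Flag readings more than z_limit standard deviations from the mean
    of the preceding `window` readings."""
    flags = []
    for i, x in enumerate(values):
        history = values[max(0, i - window):i]
        if len(history) < 2:
            flags.append(False)  # stdev needs at least two points
            continue
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            flags.append(x != mu)  # perfectly flat history: any change is unusual
        else:
            flags.append(abs(x - mu) / sigma > z_limit)
    return flags


readings = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 14.0, 10.0]
print(rolling_zscore_flags(readings))
# [False, False, False, False, False, False, True, False]
```

In practice the window and limit would be set per asset from historical data, and the flags would feed a ranking of where to look rather than trigger action directly.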

Consider three common use cases. In electric distribution, models flag feeders whose load and temperature profiles suggest emerging insulation stress, prompting targeted inspection before faults cascade. In water networks, pressure transients and acoustic signatures hint at leak formation, guiding crews to narrow search zones and reduce non-revenue water. In building portfolios, analytics correlate occupancy, weather, and equipment cycles to predict chiller failures, allowing planned outages during low-occupancy windows. Across sectors, independent case summaries often cite maintenance cost reductions of 10 to 40 percent and downtime cuts of 20 to 50 percent when predictive routines are embedded into daily workflows.

Success depends on data discipline and human factors:

– Data quality: calibrations, timestamp synchronization, unit consistency, and handling of missing values

– Feature context: connecting sensor patterns to physical mechanisms, not just statistical oddities

– Action design: routing alerts into work orders with clear instructions, parts lists, and SLAs

– Feedback loops: labeling outcomes to retrain models and retire signals that underperform
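The data-quality items above are where much of the preprocessing effort goes. As a minimal sketch, assuming readings arrive as `(timestamp, value, unit)` tuples with time-zone-aware timestamps, the function below converts pressures to kPa, aligns them to one-minute UTC buckets, and marks empty buckets as `None` rather than interpolating. The conversion table, bucket size, and function name `normalize` are illustrative assumptions.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

# Illustrative conversion factors to kPa; extend per measurement type.
TO_KPA = {"kPa": 1.0, "bar": 100.0, "psi": 6.894757}


def normalize(readings, step_s: int = 60) -> Dict[datetime, Optional[float]]:
    """Convert (timestamp, value, unit) readings to kPa on a UTC time grid.

    Buckets with no data are kept as None so gaps stay visible downstream.
    """
    buckets: Dict[int, List[float]] = defaultdict(list)
    for ts, value, unit in readings:
        if ts.tzinfo is None:
            raise ValueError(f"naive timestamp {ts}: time zone unknown")
        if unit not in TO_KPA:
            raise ValueError(f"unknown unit {unit!r}")
        epoch = int(ts.timestamp()) // step_s * step_s  # floor to bucket start
        buckets[epoch].append(value * TO_KPA[unit])
    if not buckets:
        return {}
    out: Dict[datetime, Optional[float]] = {}
    for epoch in range(min(buckets), max(buckets) + step_s, step_s):
        values = buckets.get(epoch)
        key = datetime.fromtimestamp(epoch, tz=timezone.utc)
        out[key] = sum(values) / len(values) if values else None
    return out


utc, cet = timezone.utc, timezone(timedelta(hours=1))
readings = [
    (datetime(2024, 1, 1, 12, 0, 10, tzinfo=utc), 3.0, "bar"),
    (datetime(2024, 1, 1, 13, 0, 40, tzinfo=cet), 310.0, "kPa"),  # 12:00:40 UTC
    (datetime(2024, 1, 1, 12, 2, 5, tzinfo=utc), 2.5, "bar"),
]
for ts, kpa in normalize(readings).items():
    print(ts.isoformat(), kpa)
# 2024-01-01T12:00:00+00:00 305.0
# 2024-01-01T12:01:00+00:00 None
# 2024-01-01T12:02:00+00:00 250.0
```

Rejecting naive timestamps outright is a deliberate choice in this sketch: guessing a time zone is one of the quieter ways that synchronization errors enter a model.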

Comparing preventive and predictive strategies highlights the trade-off. Preventive maintenance spreads effort on a calendar: simple to plan, but indifferent to actual condition. Predictive maintenance targets assets when stress indicators cross risk thresholds, reducing intrusive work and spare-part hoarding. A balanced portfolio is typical: time-based checks for safety-critical components where failure is intolerable, and condition-based triggers for assets with measurable wear signatures.

For budgeting, a straightforward ROI framing helps: estimate the value of avoided downtime, avoided catastrophic repairs, energy efficiency gains, and reallocated crew hours, then subtract sensor, storage, and analytics costs. Transparent assumptions build trust, especially when pilots publish both hits and misses so stakeholders can see the learning curve rather than a curated highlight reel.
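The ROI framing above translates directly into arithmetic. The Python sketch below simply encodes it as annual benefits minus annual costs; the class and field names are hypothetical, and the figures in the example are made up for illustration, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class PilotEstimate:
    # Annual benefits (hypothetical field names)
    avoided_downtime_hours: float
    value_per_downtime_hour: float
    avoided_catastrophic_repairs: float  # expected number avoided per year
    cost_per_catastrophic_repair: float
    energy_savings: float
    crew_hours_reallocated: float
    value_per_crew_hour: float
    # Annualized costs
    sensor_cost: float
    storage_cost: float
    analytics_cost: float

    def benefits(self) -> float:
        return (self.avoided_downtime_hours * self.value_per_downtime_hour
                + self.avoided_catastrophic_repairs * self.cost_per_catastrophic_repair
                + self.energy_savings
                + self.crew_hours_reallocated * self.value_per_crew_hour)

    def costs(self) -> float:
        return self.sensor_cost + self.storage_cost + self.analytics_cost

    def net_value(self) -> float:
        return self.benefits() - self.costs()

    def roi(self) -> float:
        if self.costs() <= 0:
            raise ValueError("ROI is undefined without positive costs")
        return self.net_value() / self.costs()


# Made-up inputs for illustration only.
pilot = PilotEstimate(40, 5_000, 0.5, 300_000, 20_000, 500, 60, 80_000, 10_000, 60_000)
print(pilot.net_value())       # 250000.0
print(round(pilot.roi(), 2))   # 1.67
```

Here 400,000 in estimated benefits against 150,000 in costs gives a net value of 250,000. Keeping each input as a named field makes it easy to publish the assumptions alongside the result.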

Smart Infrastructure: Sensing, Connectivity, and Digital Replicas

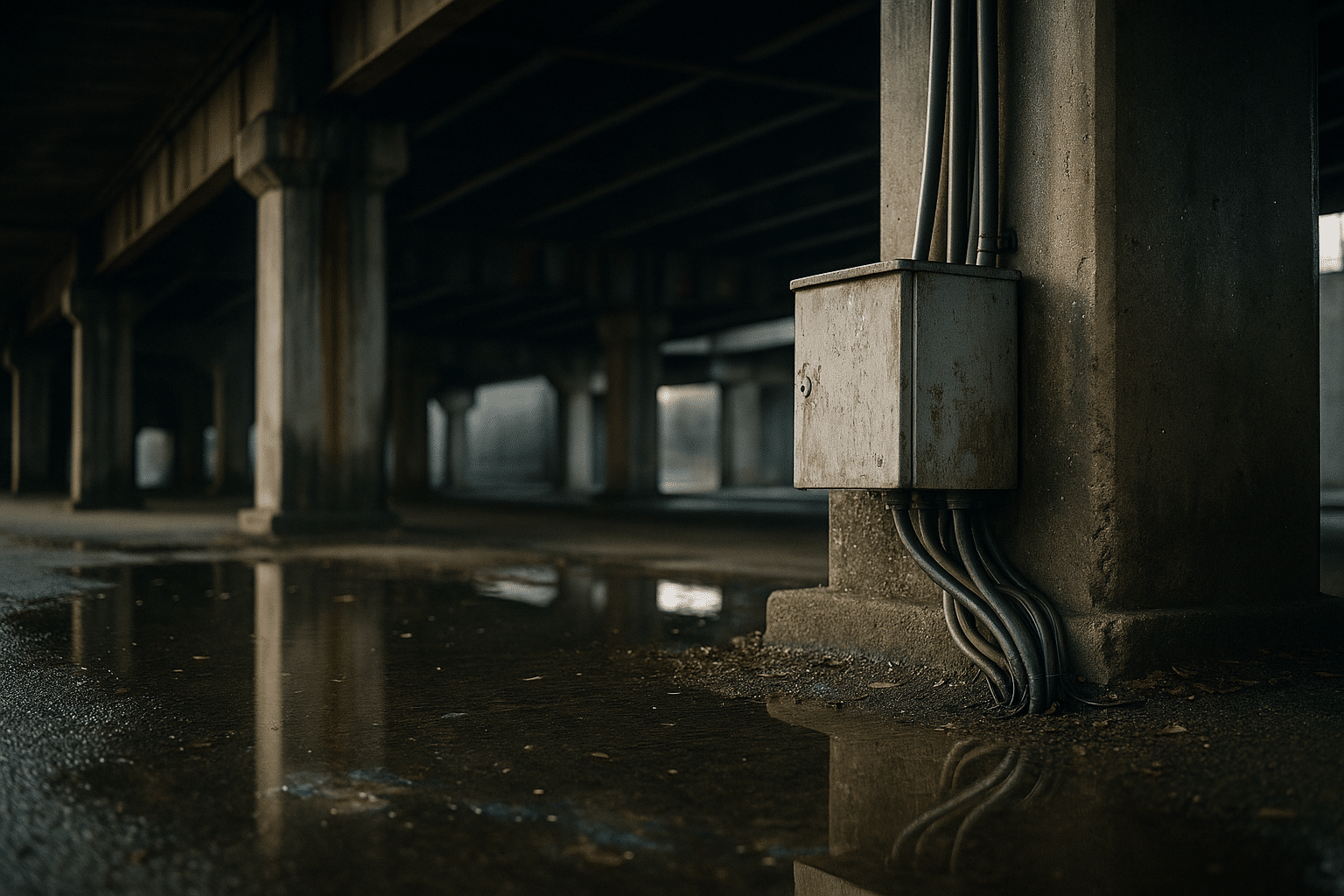

Smart infrastructure weaves together physical assets, sensors, connectivity, and software so systems can observe themselves and adapt. Picture a flood-prone underpass monitored by water-level probes and cameras, feeding alerts to control rooms where nearby signals are retimed and detours posted. Or a bridge fitted with strain gauges and accelerometers that whisper about microcracks long before they shout. Buildings, grids, roads, ports, and plants all benefit when conditions are measured continuously rather than inferred from occasional visits.

Key building blocks reinforce one another:

– Sensing: vibration, thermal, acoustic, optical, chemical, and power-quality measurements tied to known failure modes

– Connectivity: fiber for bandwidth, cellular for mobility, and low-power wide-area networks for sleepy, long-life sensors

– Edge computing: filtering, compression, and control close to the source to reduce latency and bandwidth costs

– Digital replicas: virtual models that mirror asset state, combining SCADA data, GIS context, and maintenance records
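Edge filtering often starts with report-by-exception: send a value only when it has changed meaningfully since the last one sent. This Python sketch implements a plain deadband filter with an optional heartbeat (`max_gap`) so that a quiet sensor can still be distinguished from a dead one. The function and parameter names, and the example values, are illustrative.

```python
def deadband_filter(samples, deadband, max_gap=None):
    """Report (index, value) pairs by exception.

    A sample is reported if it is the first one, if it differs from the last
    reported value by more than `deadband`, or if `max_gap` samples have
    passed since the last report (a heartbeat).
    """
    reported = []
    last_value = None
    last_index = None
    for i, value in enumerate(samples):
        stale = (max_gap is not None and last_index is not None
                 and i - last_index >= max_gap)
        if last_value is None or abs(value - last_value) > deadband or stale:
            reported.append((i, value))
            last_value, last_index = value, i
    return reported


samples = [20.0, 20.1, 20.2, 20.6, 20.7, 21.5, 21.4]
print(deadband_filter(samples, deadband=0.5))
# [(0, 20.0), (3, 20.6), (5, 21.5)]
```

Comparing against the last reported value, rather than the previous sample, keeps slow drifts from slipping through unreported.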

Integration is often harder than hardware. Legacy systems carry decades of tacit knowledge in naming conventions and alarm settings. A safe path starts with a canonical data model, reference IDs for assets, and a minimal set of standard message formats. Retrofit projects can proceed zone by zone, instrumenting high-priority stretches and validating data lineage before expanding. Greenfield sites may move faster, yet still benefit from explicit governance, because ad-hoc integrations become technical debt as soon as the first upgrade arrives.
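A canonical data model can start very small. The sketch below, assuming a hypothetical tag dictionary and asset-ID scheme, maps two legacy names for the same pump current onto one reference ID and emits a minimal JSON message that keeps the original tag for data lineage. Unmapped tags are rejected rather than guessed, which is one way to keep ad-hoc integrations from creeping in. All tag names, IDs, and function names here are illustrative.

```python
import json
from dataclasses import dataclass, asdict

# Hypothetical tag dictionary: legacy SCADA tag -> (asset ID, measurement, unit).
TAG_MAP = {
    "PS3_P1_AMPS": ("asset:pump-0031", "motor_current", "A"),
    "PUMPSTN3.PMP1.CUR": ("asset:pump-0031", "motor_current", "A"),
    "RES2_LVL": ("asset:reservoir-0002", "water_level", "m"),
}


@dataclass(frozen=True)
class Observation:
    asset_id: str
    measurement: str
    unit: str
    value: float
    timestamp: str   # ISO 8601, UTC
    source_tag: str  # original tag, kept for data lineage


def to_canonical(tag: str, value: float, timestamp: str) -> Observation:
    try:
        asset_id, measurement, unit = TAG_MAP[tag]
    except KeyError:
        # Reject rather than guess, so unmapped tags surface during validation.
        raise KeyError(f"unmapped tag {tag!r}") from None
    return Observation(asset_id, measurement, unit, float(value), timestamp, tag)


def to_message(obs: Observation) -> str:
    return json.dumps(asdict(obs), sort_keys=True)


a = to_canonical("PS3_P1_AMPS", 41.5, "2024-01-01T12:00:00Z")
b = to_canonical("PUMPSTN3.PMP1.CUR", 41.5, "2024-01-01T12:00:00Z")
print(a.asset_id == b.asset_id)  # True
print(to_message(a))
```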

Practical benefits accumulate quickly. With real-time occupancy and air-quality data, facilities teams tune ventilation to match needs, trimming energy use without compromising comfort. In road networks, pavement temperature and friction sensors inform anti-icing operations that are both timely and frugal. Port authorities monitoring crane loads and wind gusts optimize asset availability while guarding against dangerous conditions. Resilience also improves: distributed sensing and edge logic keep essentials running during connectivity hiccups, and redundant pathways reduce single points of failure. The design principle is straightforward—measure what matters, move the right data to the right place, and make state visible to both humans and software.

Conclusion: A Practical Path for Infrastructure Leaders

For executives, asset managers, and operations heads, the path forward is less about shiny tools and more about dependable routines that compound. Start with a portfolio review to identify high-impact candidates—assets with costly downtime, energy-intensive processes, or safety sensitivities. Define clear outcomes, such as fewer emergency callouts, faster restoration, or extended service intervals. Then run time-bound pilots with public success criteria and a rollback plan. The discipline to measure and iterate is worth more than any single model or gadget.

A phased plan keeps risk contained while demonstrating value:

– Assess: baseline performance, map data sources, and document critical procedures and hazards

– Pilot: deploy targeted sensing and automation with clear KPIs and operator training

– Scale: standardize data models, automate documentation, and expand to adjacent assets

– Optimize: review thresholds, prune low-value alerts, and refresh models with verified labels
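The Optimize step depends on verified labels. As an illustrative sketch, assuming each alert outcome is recorded as a `(rule_id, was_actionable)` pair, the function below computes each rule's precision and suggests which rules to keep, prune, or retune. The 20 percent precision floor, the 10-alert minimum, and the rule names are placeholders to be set locally.

```python
from collections import Counter


def alert_review(outcomes, min_precision=0.2, min_alerts=10):
    """Summarize alert rules from verified outcomes.

    outcomes: iterable of (rule_id, was_actionable) pairs.
    Returns {rule_id: (alerts_fired, precision, verdict)}.
    """
    fired = Counter()
    useful = Counter()
    for rule_id, actionable in outcomes:
        fired[rule_id] += 1
        useful[rule_id] += int(actionable)
    report = {}
    for rule_id, n in fired.items():
        precision = useful[rule_id] / n
        if n < min_alerts:
            verdict = "insufficient data"  # too few labels to judge
        elif precision < min_precision:
            verdict = "prune or retune"
        else:
            verdict = "keep"
        report[rule_id] = (n, round(precision, 3), verdict)
    return report


outcomes = ([("vib_high", True)] * 6 + [("vib_high", False)] * 4
            + [("door_open", False)] * 19 + [("door_open", True)]
            + [("temp_drift", True)] * 3)
for rule, summary in alert_review(outcomes).items():
    print(rule, summary)
# vib_high (10, 0.6, 'keep')
# door_open (20, 0.05, 'prune or retune')
# temp_drift (3, 1.0, 'insufficient data')
```

The minimum-alert guard keeps a rule from being pruned on two or three unlucky outcomes before operators have had time to label enough cases.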

Governance and workforce development are the scaffolding. Document decision logic, test fail-safe states, and align with cybersecurity and privacy expectations. Involve frontline staff early; many of the most effective alerts come from operator intuition translated into features and rules. Build fluency with simple, shared dashboards rather than one-off screens. Finally, keep the financial lens sharp: track avoided outages, energy intensity, crew utilization, and asset life extension, then reinvest part of the gains to sustain the program.

The message is optimistic but grounded. Automation provides reliability, predictive analytics adds foresight, and smart infrastructure supplies the continuous context that makes both work. Taken together, they help you deliver safer service, better compliance, and steadier budgets. If you steward roads, plants, buildings, or grids, the next step is specific and achievable: choose one process to automate, one failure mode to predict, and one asset to instrument more deeply, and let measured progress do the convincing.